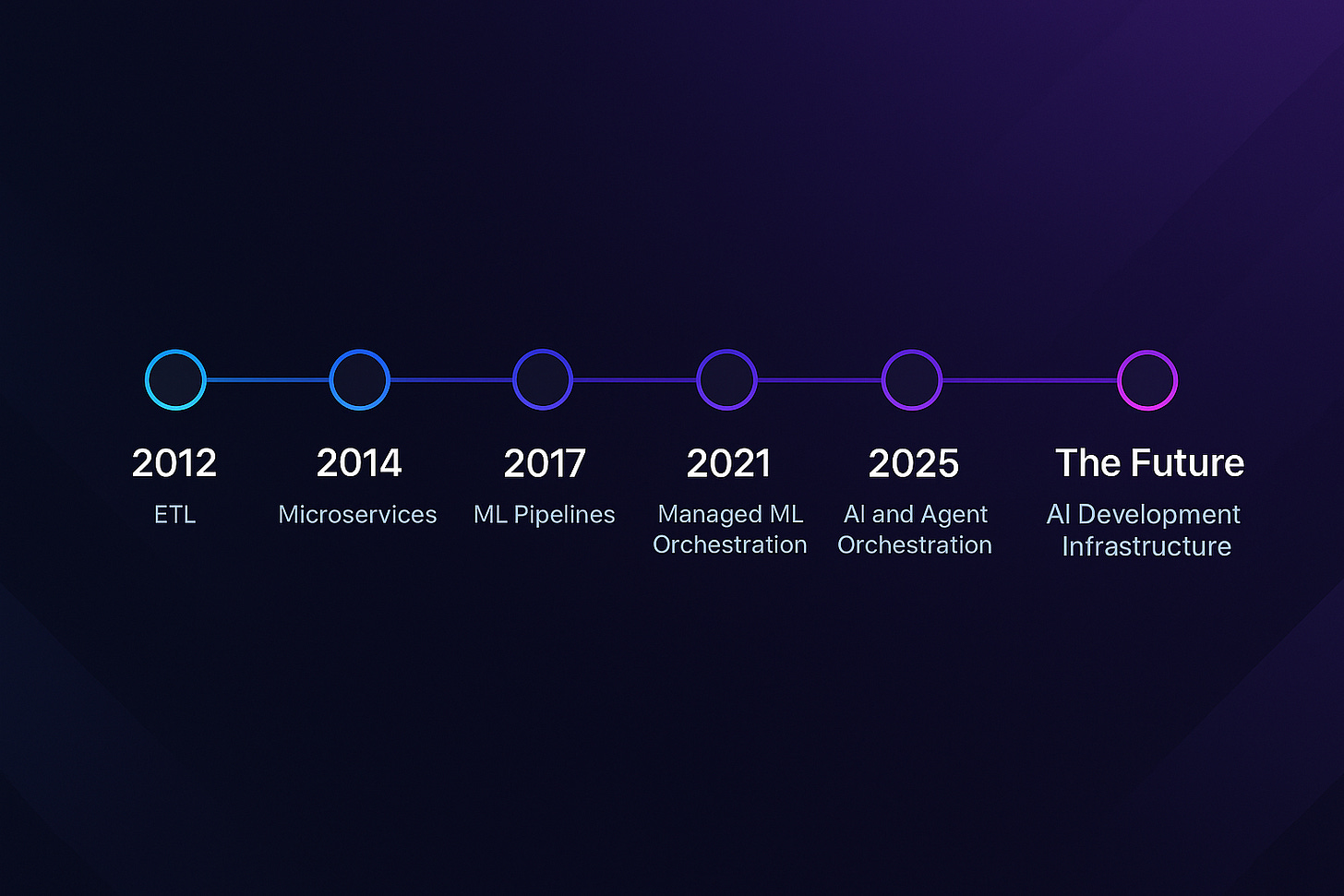

The Evolution and Future of AI Orchestration

Let’s chart the history of orchestration and explore why it has become a non-negotiable for the future of AI and agents.

The term orchestration has evolved dramatically over the past decade. What began as a way to sequence microservices and data processing jobs has expanded into a core enabler for modern applications, spanning traditional backend systems to highly dynamic, intelligent agentic workloads.

Today, we’re witnessing the early days of a new kind of orchestration that doesn’t just move data or call services but thinks, adapts, and reacts in real time.

Spoiler alert:

The real bottleneck isn’t models or compute - it’s us.

Orchestration is moving from “what runs next” to “what thinks next,” and that shift is forcing a powerful new layer of our tech stacks to take shape. But to understand what’s inevitable, we need to understand what got us here.

1. The Birth of Orchestration: ETL and Microservices

2012 - ETL

Orchestration first emerged to manage ETL (Extract, Transform, Load) pipelines, where schedulers like Luigi and, later, Airflow sequenced ingestion and transformation workflows across large data warehouses.

2014 - Microservices

As making use of ever-growing collections of data became more critical to innovation, the need for microservice orchestration emerged: systems like AWS Step Functions and Cadence provided durable execution of service calls, with retries, in transactional systems.

In these early cases, orchestration focused primarily on sequencing: determining when and how to call a function, run a script, or trigger a container. The heavy compute lifting was offloaded to dedicated compute engines like Spark, AWS Batch, Google Cloud Run, or, later, Kubernetes (k8s). Orchestration didn’t touch the compute, and it didn’t need to.
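To make “sequencing only” concrete, here is a minimal sketch of that style of orchestration, assuming a hypothetical four-step pipeline (`extract_orders`, `extract_users`, `transform`, `load` are illustrative names, not from any real system). The orchestrator resolves the DAG into a valid run order and triggers each task; the tasks themselves are placeholders for work that would be handed off to an external engine.

```python
from graphlib import TopologicalSorter
from typing import Callable


def make_task(name: str) -> Callable[[], str]:
    # Placeholder task (hypothetical): a real one would submit a Spark job,
    # start a container, etc., rather than do any computation here.
    def task() -> str:
        return f"{name} done"
    return task


# Each task maps to the set of tasks that must finish before it can start.
DEPENDENCIES: dict[str, set[str]] = {
    "extract_orders": set(),
    "extract_users": set(),
    "transform": {"extract_orders", "extract_users"},
    "load": {"transform"},
}

TASKS = {name: make_task(name) for name in DEPENDENCIES}


def run_pipeline(tasks: dict[str, Callable[[], str]],
                 deps: dict[str, set[str]]) -> list[str]:
    """Trigger each task once, in an order that respects its dependencies."""
    ran: list[str] = []
    for name in TopologicalSorter(deps).static_order():
        tasks[name]()  # the orchestrator only triggers; it does no heavy compute
        ran.append(name)
    return ran


if __name__ == "__main__":
    print(run_pipeline(TASKS, DEPENDENCIES))
```

The two extract steps have no ordering between them, so a real scheduler could run them in parallel; this sketch runs them sequentially for simplicity.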

2. Machine Learning: Orchestration Meets Compute

2017 - ML Pipelines

ML introduced new requirements: expensive compute and resiliency.

Unlike microservices or batch ETL, ML workflows are long-running processes tightly coupled to infrastructure. GPUs, which ML training increasingly depends on, are more expensive than CPUs. Training models, evaluating them, and tuning hyperparameters all require dynamic allocation of GPU or CPU resources. And because these processes take much longer to run, recovering resiliently from failures became much more important.

These needs spawned ML pipeline orchestrators like Flyte, which remain among the most widely used open-source orchestration solutions today.
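The resiliency requirement usually comes down to two mechanisms: retrying failed steps and not redoing work that already succeeded. The following is a minimal sketch of both, assuming an in-memory `CheckpointStore` as a stand-in for durable storage and a hypothetical `FlakyStep` that simulates a preempted GPU node. It is not how Flyte or any other orchestrator implements this, just an illustration of the idea.

```python
import time
from typing import Callable


class CheckpointStore:
    """In-memory stand-in for durable storage (hypothetical; a real system
    would persist results to object storage or a database)."""

    def __init__(self) -> None:
        self._results: dict[str, object] = {}

    def has(self, key: str) -> bool:
        return key in self._results

    def get(self, key: str) -> object:
        return self._results[key]

    def put(self, key: str, value: object) -> None:
        self._results[key] = value


def run_step(name: str, fn: Callable[[], object], store: CheckpointStore,
             max_retries: int = 3, base_delay: float = 0.01) -> object:
    """Run a step, retrying failures with exponential backoff.
    A step that already succeeded is served from the checkpoint instead of rerun."""
    if store.has(name):
        return store.get(name)  # completed earlier: skip expensive recomputation
    for attempt in range(max_retries + 1):
        try:
            result = fn()
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the failure
            time.sleep(base_delay * 2 ** attempt)  # back off: 1x, 2x, 4x, ...
        else:
            store.put(name, result)
            return result


class FlakyStep:
    """Hypothetical step that fails `failures` times before succeeding."""

    def __init__(self, failures: int, value: object) -> None:
        self.failures = failures
        self.value = value
        self.calls = 0

    def __call__(self) -> object:
        self.calls += 1
        if self.calls <= self.failures:
            raise RuntimeError("simulated preemption")
        return self.value
```

With `train = FlakyStep(failures=2, value="model-v1")`, the first `run_step("train", train, store)` succeeds on the third attempt, and a second call returns the checkpointed result without invoking `train` again. For hour-long training jobs, that second property matters at least as much as the retry.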

2021 - Managed ML Orchestration

As ML orchestration became more sophisticated, it also became a heavier burden on the ML, data, and platform teams who needed to maintain infrastructure. They needed orchestration systems that define the DAG (directed acyclic graph), provision resources, manage job lifecycles, and scale to zero cleanly.

Orchestration platforms like Union.ai, Prefect, and managed Airflow services emerged to offload the infrastructure burden of orchestration. As the age of AI has taken shape, they’ve become increasingly popular with teams for whom AI/ML workflows are a critical part of their work.
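“Scale to zero” is simple to state: when nothing is queued, no workers (and no GPUs) should be running. Here is a minimal sketch of the sizing rule behind it, assuming a hypothetical `desired_workers` function with a fixed per-worker capacity and a hard cap. Real autoscalers add cooldowns and smoothing so they don’t thrash between sizes.

```python
import math


def desired_workers(queued_jobs: int, jobs_per_worker: int, max_workers: int) -> int:
    """Workers needed for the current queue (hypothetical sizing rule).

    Returns 0 when the queue is empty, so idle capacity is released entirely,
    and never exceeds max_workers, so a burst cannot exhaust the budget.
    """
    if jobs_per_worker <= 0:
        raise ValueError("jobs_per_worker must be positive")
    if queued_jobs <= 0:
        return 0  # scale to zero: pay nothing while idle
    return min(max_workers, math.ceil(queued_jobs / jobs_per_worker))
```

For example, with 4 jobs per worker and a cap of 10, a queue of 9 jobs needs 3 workers, and a queue of 100 is clamped to 10.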

3. Agentic Systems: Orchestration in the Age of AI

2025 - AI and Agent Orchestration

We’re now entering a new phase: orchestrating intelligent agents and AI systems.

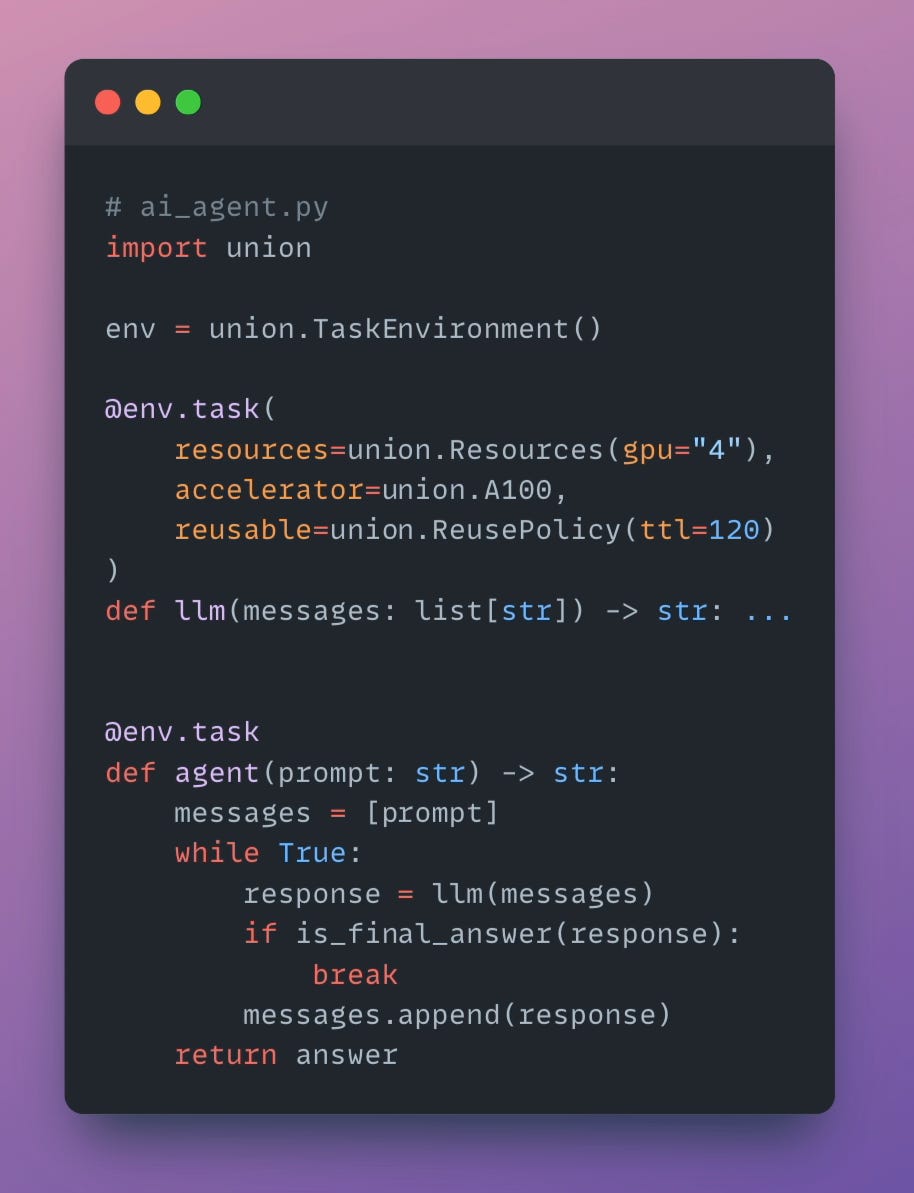

Agents are autonomous, stateful programs (often LLM-driven) that can plan, reason, and act. And orchestration is critical to their success at scale. Why?

They depend on integrations. Agents frequently rely on external tools (APIs, databases, models), and these interactions must be coordinated, retried on failure, and tracked.

They make dynamic decisions. Agents often refine outputs across multiple passes, much like hyperparameter tuning or recursive feature elimination in ML.

They delegate. One agent may invoke another agent, which may branch off new tools or workflows.

They require compute. Consider agents that decide to scrape the web or execute code. If we think of agents as software engineers, we quickly realize that orchestration without access to compute limits their autonomy.

Today’s AI development platforms must be able to orchestrate these stochastic systems end-to-end and dynamically, not just statically. Otherwise, we cut the agency out of the agents.

This mirrors insights from Anthropic: building effective agents means managing tools, adapting strategies, and orchestrating long-running background jobs reliably. Orchestration here isn’t a static DAG. It’s a dynamic loop.
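Here is a minimal sketch of what “a dynamic loop” means in practice. The `fake_planner`, the `TOOLS` registry, and the `run_agent` loop are all hypothetical. The planner stands in for an LLM call, and this is not Anthropic’s implementation or any framework’s API. The point is that no graph is declared up front: each step is chosen at runtime from what has happened so far, and the orchestrator enforces a step budget so the loop can’t run forever.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    tool: str  # name of a tool in TOOLS, or "finish"
    arg: str


@dataclass
class AgentState:
    goal: str
    # (tool, argument, observation) for every step taken so far
    history: list[tuple[str, str, str]] = field(default_factory=list)


# Hypothetical tools; real agents would call APIs, run code, or invoke other agents.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: f"3 results for {q!r}",
    "summarize": lambda text: f"summary of ({text})",
}


def fake_planner(state: AgentState) -> Action:
    """Stand-in for an LLM: picks the next action based on the history so far."""
    if not state.history:
        return Action("search", state.goal)
    last_tool, _, observation = state.history[-1]
    if last_tool == "search":
        return Action("summarize", observation)
    return Action("finish", observation)


def run_agent(goal: str, planner: Callable[[AgentState], Action],
              max_steps: int = 10) -> tuple[str, AgentState]:
    """Plan, act, observe, and repeat until the planner finishes or the budget runs out."""
    state = AgentState(goal)
    for _ in range(max_steps):
        action = planner(state)
        if action.tool == "finish":
            return action.arg, state
        observation = TOOLS[action.tool](action.arg)
        state.history.append((action.tool, action.arg, observation))
    raise RuntimeError("agent did not finish within max_steps")
```

Swapping `fake_planner` for a model call changes which tools run and in what order without changing the loop. That is the property a static DAG cannot express.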

The Future - AI Development Infrastructure

“By some estimates, more than 80% of AI projects fail — twice the rate of failure for information technology projects that do not involve AI.” - RAND

As ML and agentic systems mature in 2025 and beyond, the teams building them are finding that a common set of AI development needs is taking center stage. These are not hypothetical problems. We’ve seen them play out first-hand with actual customers and the products they’re building.

Dynamic workflows: Unlike static DAGs, dynamic workflows allow agents and AI systems to make on-the-fly decisions at runtime.

Flexible infrastructure integration: Orchestration must happen across clouds and clusters, in some cases dynamically switching to the source of the most affordable compute.

Cross-team execution: These platforms must bring individuals, teams, and agents together in one development environment where they can collaborate securely and reliably.

Observability, reliability, and governance: Agents and workflows can run autonomously in a black box. AI development platforms should add transparency to reasoning, failures, data lineage, and resource usage.

Scale: Big data is getting bigger. Compute power is in higher demand than ever. Platforms need to reliably handle the scale requirements inherent in these systems.
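The “flexible infrastructure integration” need above can be made concrete with a small routing rule. Here is a minimal sketch, assuming a hypothetical list of clusters with made-up hourly GPU prices and free capacity (the names and numbers are illustrative, not real quotes). The orchestrator sends a job to the cheapest cluster that can actually fit it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Cluster:
    name: str
    gpu_hourly_usd: float  # illustrative price per GPU-hour, not a real quote
    free_gpus: int


def pick_cluster(clusters: list[Cluster], gpus_needed: int) -> Optional[Cluster]:
    """Return the cheapest cluster with enough free GPUs, or None if none fits."""
    candidates = [c for c in clusters if c.free_gpus >= gpus_needed]
    return min(candidates, key=lambda c: c.gpu_hourly_usd, default=None)


def estimated_cost(cluster: Cluster, gpus: int, hours: float) -> float:
    """Rough job cost on a cluster: GPUs x hours x hourly price."""
    return gpus * hours * cluster.gpu_hourly_usd
```

A real scheduler would also weigh data locality, egress costs, and quota, and would re-evaluate as prices and capacity change. The sketch only shows the core “cheapest feasible target” decision.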

Conclusion:

The AI Development Infrastructure Layer

We’re entering a world where orchestration isn’t just “what runs next,” but “what thinks next.”

The evolution from static ETL pipelines to dynamic ML workflows has now converged with the rise of autonomous agents, and this convergence reveals a fundamental truth.

Agents and modern ML systems demand a new layer of our tech stacks: AI Development Infrastructure.

Agents and ML systems are inherently stochastic: they make runtime decisions based on the data they process, require dynamic compute allocation, and involve iterative refinement. Most critically, both demand orchestration that can adapt to changing conditions rather than simply executing predetermined, linear steps.

This convergence points to a single, unified orchestration abstraction that can deliver what both domains desperately need: durability for long-running processes that can't afford to lose state, dynamic compute provisioning that scales with demand, and fault tolerance that gracefully handles the inevitable failures in complex, distributed systems.

The future of orchestration powers the AI Development Infrastructure layer by being:

Dynamic - adapting workflow structure based on runtime conditions

Ephemeral - spinning resources up and down as workflows demand

Multi-agent and multi-human - orchestrating collaboration across autonomous systems and teams

Reliable and observable - providing visibility and recovery for systems that run autonomously

Secure - managing access and execution across diverse, distributed workloads

Orchestration is becoming the central nervous system of AI systems, powering this new AI Development Infrastructure layer of our tech stacks.

The question is no longer "how do I run this pipeline?" but “how do I enable systems to decide what to run, when to run it, and how to do it reliably at scale?”